DorisDB vs ClickHouse SSB对比测试

TL;DR

- 进行本次测试时对DorisDB了解甚微

- 本次测试由于服务器资源有限, 没有严格遵循单一变量原则进行测试

- 本次测试有一定参考意义

数据导入速度

- ClickHouse: 3500s

- DorisDB: 5160s

数据压缩情况(通过磁盘占用空间比较)

- ClickHouse: 85.2G

- DorisDB: 132G

查询速度

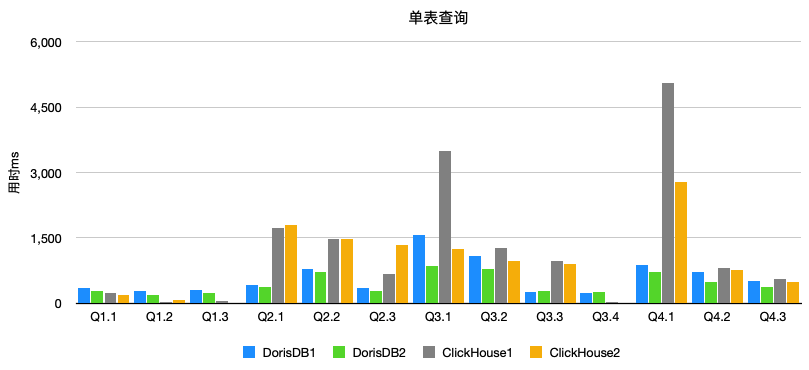

单表查询

| DorisDB1 | DorisDB2 | ClickHouse1 | ClickHouse2 | |

|---|---|---|---|---|

| Q1.1 | 350 | 290 | 226 | 195 |

| Q1.2 | 270 | 190 | 34 | 63 |

| Q1.3 | 310 | 240 | 43 | 22 |

| Q2.1 | 410 | 370 | 1,723 | 1,791 |

| Q2.2 | 780 | 720 | 1,463 | 1,470 |

| Q2.3 | 340 | 280 | 659 | 1,337 |

| Q3.1 | 1,560 | 860 | 3,488 | 1,254 |

| Q3.2 | 1,080 | 790 | 1,272 | 966 |

| Q3.3 | 250 | 290 | 979 | 889 |

| Q3.4 | 230 | 260 | 36 | 20 |

| Q4.1 | 870 | 720 | 5,067 | 2,791 |

| Q4.2 | 720 | 490 | 804 | 752 |

| Q4.3 | 510 | 380 | 561 | 482 |

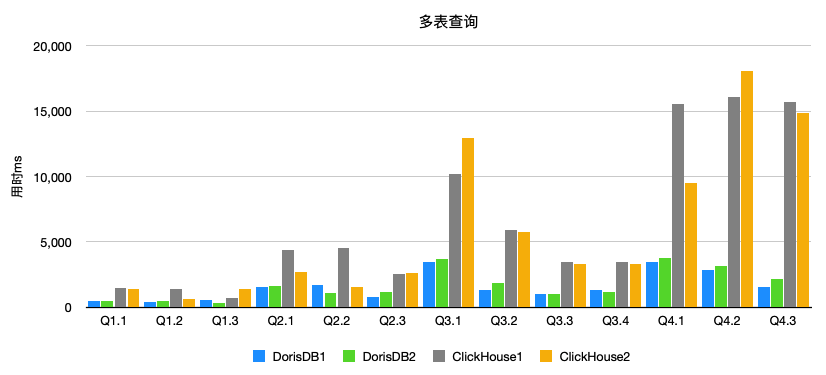

多表查询

| DorisDB1 | DorisDB2 | ClickHouse1 | ClickHouse2 | |

|---|---|---|---|---|

| Q1.1 | 450 | 490 | 1,496 | 1,424 |

| Q1.2 | 410 | 450 | 1,366 | 659 |

| Q1.3 | 510 | 340 | 678 | 1,377 |

| Q2.1 | 1,560 | 1,600 | 4,360 | 2,667 |

| Q2.2 | 1,690 | 1,060 | 4,498 | 1,554 |

| Q2.3 | 780 | 1,150 | 2,569 | 2,577 |

| Q3.1 | 3,480 | 3,700 | 10,190 | 12,960 |

| Q3.2 | 1,320 | 1,850 | 5,926 | 5,743 |

| Q3.3 | 1,030 | 1,040 | 3,445 | 3,300 |

| Q3.4 | 1,330 | 1,170 | 3,455 | 3,330 |

| Q4.1 | 3,480 | 3,750 | 15,560 | 9,494 |

| Q4.2 | 2,830 | 3,170 | 16,109 | 18,048 |

| Q4.3 | 1,560 | 2,140 | 15,685 | 14,838 |

环境信息

ClickHouse: 3台 华为云ECS 高性能计算型 | h3.xlarge.2 | 4vCPUs | 8GB | 超高IO SSD

DorisDB: 3台 华为云ECS 高性能计算型 | h3.2xlarge.4 | 8vCPUs | 32GB | 超高IO SSD

由于资源紧张, DorisDB所在服务器上还部署了rc mysql, 但过年期间无人使用, 实际可用内存16G.

DorisDB: DorisDB-SE-1.12.1 3Fe 3Be, fe和be部署在一起

ClickHouse: 20.10.3.30, 三分片两副本混合部署, 部署方法详见ClickHouse集群多实例部署

注意

实际数据导入后DorsiDB和ClickHouse除lineorder_flat外数据无任何差异

lineorder_flat:

- DorsiDB: 546669614

- ClickHouse: 622259902

DorisDB

部署略

构建数据

首先下载ssb-poc工具包并编译

1 | wget http://dorisdb-public.oss-cn-zhangjiakou.aliyuncs.com/ssb-poc-0.9.zip |

所有相关工具安装到output目录。

进入output目录,生成数据

1 | cd output |

建表

修改配置文件conf/doris.conf,指定脚本操作的Doris集群地址

1 | # for mysql cmd |

执行脚本建表

1 | bin/create_db_table.sh ddl_100 |

我这里建表跑建表脚本报错了, 改为手动建表, 参考http://doc.dorisdb.com/2146807

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

`lo_orderkey` int(11) NOT NULL COMMENT "",

`lo_linenumber` int(11) NOT NULL COMMENT "",

`lo_custkey` int(11) NOT NULL COMMENT "",

`lo_partkey` int(11) NOT NULL COMMENT "",

`lo_suppkey` int(11) NOT NULL COMMENT "",

`lo_orderdate` int(11) NOT NULL COMMENT "",

`lo_orderpriority` varchar(16) NOT NULL COMMENT "",

`lo_shippriority` int(11) NOT NULL COMMENT "",

`lo_quantity` int(11) NOT NULL COMMENT "",

`lo_extendedprice` int(11) NOT NULL COMMENT "",

`lo_ordtotalprice` int(11) NOT NULL COMMENT "",

`lo_discount` int(11) NOT NULL COMMENT "",

`lo_revenue` int(11) NOT NULL COMMENT "",

`lo_supplycost` int(11) NOT NULL COMMENT "",

`lo_tax` int(11) NOT NULL COMMENT "",

`lo_commitdate` int(11) NOT NULL COMMENT "",

`lo_shipmode` varchar(11) NOT NULL COMMENT ""

) ENGINE=OLAP

DUPLICATE KEY(`lo_orderkey`)

COMMENT "OLAP"

DISTRIBUTED BY HASH(`lo_orderkey`) BUCKETS 96

PROPERTIES (

"replication_num" = "1",

"colocate_with" = "group1",

"in_memory" = "false",

"storage_format" = "DEFAULT"

);

CREATE TABLE IF NOT EXISTS `customer` (

`c_custkey` int(11) NOT NULL COMMENT "",

`c_name` varchar(26) NOT NULL COMMENT "",

`c_address` varchar(41) NOT NULL COMMENT "",

`c_city` varchar(11) NOT NULL COMMENT "",

`c_nation` varchar(16) NOT NULL COMMENT "",

`c_region` varchar(13) NOT NULL COMMENT "",

`c_phone` varchar(16) NOT NULL COMMENT "",

`c_mktsegment` varchar(11) NOT NULL COMMENT ""

) ENGINE=OLAP

DUPLICATE KEY(`c_custkey`)

COMMENT "OLAP"

DISTRIBUTED BY HASH(`c_custkey`) BUCKETS 12

PROPERTIES (

"replication_num" = "1",

"colocate_with" = "groupa2",

"in_memory" = "false",

"storage_format" = "DEFAULT"

);

CREATE TABLE IF NOT EXISTS `dates` (

`d_datekey` int(11) NOT NULL COMMENT "",

`d_date` varchar(20) NOT NULL COMMENT "",

`d_dayofweek` varchar(10) NOT NULL COMMENT "",

`d_month` varchar(11) NOT NULL COMMENT "",

`d_year` int(11) NOT NULL COMMENT "",

`d_yearmonthnum` int(11) NOT NULL COMMENT "",

`d_yearmonth` varchar(9) NOT NULL COMMENT "",

`d_daynuminweek` int(11) NOT NULL COMMENT "",

`d_daynuminmonth` int(11) NOT NULL COMMENT "",

`d_daynuminyear` int(11) NOT NULL COMMENT "",

`d_monthnuminyear` int(11) NOT NULL COMMENT "",

`d_weeknuminyear` int(11) NOT NULL COMMENT "",

`d_sellingseason` varchar(14) NOT NULL COMMENT "",

`d_lastdayinweekfl` int(11) NOT NULL COMMENT "",

`d_lastdayinmonthfl` int(11) NOT NULL COMMENT "",

`d_holidayfl` int(11) NOT NULL COMMENT "",

`d_weekdayfl` int(11) NOT NULL COMMENT ""

) ENGINE=OLAP

DUPLICATE KEY(`d_datekey`)

COMMENT "OLAP"

DISTRIBUTED BY HASH(`d_datekey`) BUCKETS 1

PROPERTIES (

"replication_num" = "1",

"in_memory" = "false",

"colocate_with" = "groupa3",

"storage_format" = "DEFAULT"

);

CREATE TABLE IF NOT EXISTS `supplier` (

`s_suppkey` int(11) NOT NULL COMMENT "",

`s_name` varchar(26) NOT NULL COMMENT "",

`s_address` varchar(26) NOT NULL COMMENT "",

`s_city` varchar(11) NOT NULL COMMENT "",

`s_nation` varchar(16) NOT NULL COMMENT "",

`s_region` varchar(13) NOT NULL COMMENT "",

`s_phone` varchar(16) NOT NULL COMMENT ""

) ENGINE=OLAP

DUPLICATE KEY(`s_suppkey`)

COMMENT "OLAP"

DISTRIBUTED BY HASH(`s_suppkey`) BUCKETS 12

PROPERTIES (

"replication_num" = "1",

"colocate_with" = "groupa4",

"in_memory" = "false",

"storage_format" = "DEFAULT"

);

CREATE TABLE IF NOT EXISTS `part` (

`p_partkey` int(11) NOT NULL COMMENT "",

`p_name` varchar(23) NOT NULL COMMENT "",

`p_mfgr` varchar(7) NOT NULL COMMENT "",

`p_category` varchar(8) NOT NULL COMMENT "",

`p_brand` varchar(10) NOT NULL COMMENT "",

`p_color` varchar(12) NOT NULL COMMENT "",

`p_type` varchar(26) NOT NULL COMMENT "",

`p_size` int(11) NOT NULL COMMENT "",

`p_container` varchar(11) NOT NULL COMMENT ""

) ENGINE=OLAP

DUPLICATE KEY(`p_partkey`)

COMMENT "OLAP"

DISTRIBUTED BY HASH(`p_partkey`) BUCKETS 12

PROPERTIES (

"replication_num" = "1",

"colocate_with" = "groupa5",

"in_memory" = "false",

"storage_format" = "DEFAULT"

);

CREATE TABLE IF NOT EXISTS `lineorder_flat` (

`LO_ORDERKEY` int(11) NOT NULL COMMENT "",

`LO_ORDERDATE` date NOT NULL COMMENT "",

`LO_LINENUMBER` tinyint(4) NOT NULL COMMENT "",

`LO_CUSTKEY` int(11) NOT NULL COMMENT "",

`LO_PARTKEY` int(11) NOT NULL COMMENT "",

`LO_SUPPKEY` int(11) NOT NULL COMMENT "",

`LO_ORDERPRIORITY` varchar(100) NOT NULL COMMENT "",

`LO_SHIPPRIORITY` tinyint(4) NOT NULL COMMENT "",

`LO_QUANTITY` tinyint(4) NOT NULL COMMENT "",

`LO_EXTENDEDPRICE` int(11) NOT NULL COMMENT "",

`LO_ORDTOTALPRICE` int(11) NOT NULL COMMENT "",

`LO_DISCOUNT` tinyint(4) NOT NULL COMMENT "",

`LO_REVENUE` int(11) NOT NULL COMMENT "",

`LO_SUPPLYCOST` int(11) NOT NULL COMMENT "",

`LO_TAX` tinyint(4) NOT NULL COMMENT "",

`LO_COMMITDATE` date NOT NULL COMMENT "",

`LO_SHIPMODE` varchar(100) NOT NULL COMMENT "",

`C_NAME` varchar(100) NOT NULL COMMENT "",

`C_ADDRESS` varchar(100) NOT NULL COMMENT "",

`C_CITY` varchar(100) NOT NULL COMMENT "",

`C_NATION` varchar(100) NOT NULL COMMENT "",

`C_REGION` varchar(100) NOT NULL COMMENT "",

`C_PHONE` varchar(100) NOT NULL COMMENT "",

`C_MKTSEGMENT` varchar(100) NOT NULL COMMENT "",

`S_NAME` varchar(100) NOT NULL COMMENT "",

`S_ADDRESS` varchar(100) NOT NULL COMMENT "",

`S_CITY` varchar(100) NOT NULL COMMENT "",

`S_NATION` varchar(100) NOT NULL COMMENT "",

`S_REGION` varchar(100) NOT NULL COMMENT "",

`S_PHONE` varchar(100) NOT NULL COMMENT "",

`P_NAME` varchar(100) NOT NULL COMMENT "",

`P_MFGR` varchar(100) NOT NULL COMMENT "",

`P_CATEGORY` varchar(100) NOT NULL COMMENT "",

`P_BRAND` varchar(100) NOT NULL COMMENT "",

`P_COLOR` varchar(100) NOT NULL COMMENT "",

`P_TYPE` varchar(100) NOT NULL COMMENT "",

`P_SIZE` tinyint(4) NOT NULL COMMENT "",

`P_CONTAINER` varchar(100) NOT NULL COMMENT ""

) ENGINE=OLAP

DUPLICATE KEY(`LO_ORDERKEY`)

COMMENT "OLAP"

DISTRIBUTED BY HASH(`LO_ORDERKEY`) BUCKETS 192

PROPERTIES (

"replication_num" = "1",

"colocate_with" = "groupxx1",

"in_memory" = "false",

"storage_format" = "DEFAULT"

);连接一个fe执行以上sql即可

导入数据

DorisDB测试中使用python脚本导入数据, 需要安装pymysql库

使用Stream load导入单表数据

1 | (myrecover) [root@bj2-mysql-rc-drill-02 output]# time bin/stream_load.sh data_dir |

插入数据到宽表lineorder_flat

1 | (myrecover) [root@bj2-mysql-rc-drill-02 output]# time bin/flat_insert.sh |

有一个不理解的现象是, flat_insert.sh已经执行完毕, 但是查看lineorder_flat表行数时, 发现其值是在不断增大

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

+-----------+

| count(*) |

+-----------+

| 182256332 |

+-----------+

1 row in set (0.10 sec)

mysql> select count(*) from lineorder_flat;

+-----------+

| count(*) |

+-----------+

| 364316982 |

+-----------+

1 row in set (0.16 sec)

mysql> select count(*) from lineorder_flat;

+-----------+

| count(*) |

+-----------+

| 546669614 |

+-----------+

1 row in set (0.41 sec)

可以看到DorisDB数据导入耗时约86分钟

最终数据

1 | mysql> select count(*) from customer ; |

数据占用空间

1 | du -sh /data/DorisDB-SE-1.12.1/be/storage/data/ |

单表查询测试

1 | set global parallel_fragment_exec_instance_num = 4; |

Q1.1

1 | SELECT sum(lo_extendedprice * lo_discount) AS `revenue` |

Q1.2

1 | SELECT sum(lo_extendedprice * lo_discount) AS revenue FROM lineorder_flat |

Q1.3

1 | SELECT sum(lo_extendedprice * lo_discount) AS revenue |

Q2.1

1 | SELECT sum(lo_revenue), year(lo_orderdate) AS year, p_brand |

Q2.2

1 | SELECT |

Q2.3

1 | SELECT sum(lo_revenue), year(lo_orderdate) AS year, p_brand |

Q3.1

1 | SELECT c_nation, s_nation, year(lo_orderdate) AS year, sum(lo_revenue) AS revenue FROM lineorder_flat |

Q3.2

1 | SELECT c_city, s_city, year(lo_orderdate) AS year, sum(lo_revenue) AS revenue |

Q3.3

1 | SELECT c_city, s_city, year(lo_orderdate) AS year, sum(lo_revenue) AS revenue |

Q3.4

1 | SELECT c_city, s_city, year(lo_orderdate) AS year, sum(lo_revenue) AS revenue |

Q4.1

在DorisDB测试文档中对该SQL执行前执行了

1 | set vectorized_engine_enable = FALSE; |

但实际发现执行上面语句后反而会慢很多

1 | SELECT year(lo_orderdate) AS year, c_nation, sum(lo_revenue - lo_supplycost) AS profit FROM lineorder_flat |

1 | set vectorized_engine_enable = TRUE; |

我比对了开启和关闭vectorized_engine_enable后的查询结果, 发现是一样的, 这里我就不明白为啥要设置vectorized_engine_enable为False了

Q4.2

1 | SELECT year(lo_orderdate) AS year, |

Q4.3

1 | SELECT year(lo_orderdate) AS year, s_city, p_brand, |

多表关联测试

执行

1 | set global parallel_fragment_exec_instance_num = 8; |

Q1.1

1 | select sum(lo_revenue) as revenue |

Q1.2

1 | select sum(lo_revenue) as revenue |

Q1.3

1 | select sum(lo_revenue) as revenue |

Q2.1

1 | select sum(lo_revenue) as lo_revenue, d_year, p_brand |

Q2.2

1 | select sum(lo_revenue) as lo_revenue, d_year, p_brand |

Q2.3

1 | select sum(lo_revenue) as lo_revenue, d_year, p_brand |

Q3.1

1 | select c_nation, s_nation, d_year, sum(lo_revenue) as lo_revenue |

Q3.2

1 | select c_city, s_city, d_year, sum(lo_revenue) as lo_revenue |

Q3.3

1 | select c_city, s_city, d_year, sum(lo_revenue) as lo_revenue |

Q3.4

1 | select c_city, s_city, d_year, sum(lo_revenue) as lo_revenue |

Q4.1

1 | select d_year, c_nation, sum(lo_revenue) - sum(lo_supplycost) as profit |

Q4.2

1 | select d_year, s_nation, p_category, sum(lo_revenue) - sum(lo_supplycost) as profit |

Q4.3

1 | select d_year, s_city, p_brand, sum(lo_revenue) - sum(lo_supplycost) as profit |

ClickHouse

构建数据

1 | git clone https://github.com/vadimtk/ssb-dbgen.git |

建表

1 | CREATE DATABASE ssb on cluster ck_cluster; |

导入数据

1 | cd ssb-dbgen |

Converting “star schema” to denormalized “flat schema”:1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90在一个节点执行

set max_bytes_before_external_group_by=2000000000;

set max_memory_usage=4000000000;

CREATE TABLE lineorder_flat_tmp

ENGINE = MergeTree

PARTITION BY toYear(LO_ORDERDATE)

ORDER BY (LO_ORDERDATE, LO_ORDERKEY) AS

SELECT

l.LO_ORDERKEY AS LO_ORDERKEY,

l.LO_LINENUMBER AS LO_LINENUMBER,

l.LO_CUSTKEY AS LO_CUSTKEY,

l.LO_PARTKEY AS LO_PARTKEY,

l.LO_SUPPKEY AS LO_SUPPKEY,

l.LO_ORDERDATE AS LO_ORDERDATE,

l.LO_ORDERPRIORITY AS LO_ORDERPRIORITY,

l.LO_SHIPPRIORITY AS LO_SHIPPRIORITY,

l.LO_QUANTITY AS LO_QUANTITY,

l.LO_EXTENDEDPRICE AS LO_EXTENDEDPRICE,

l.LO_ORDTOTALPRICE AS LO_ORDTOTALPRICE,

l.LO_DISCOUNT AS LO_DISCOUNT,

l.LO_REVENUE AS LO_REVENUE,

l.LO_SUPPLYCOST AS LO_SUPPLYCOST,

l.LO_TAX AS LO_TAX,

l.LO_COMMITDATE AS LO_COMMITDATE,

l.LO_SHIPMODE AS LO_SHIPMODE,

c.C_NAME AS C_NAME,

c.C_ADDRESS AS C_ADDRESS,

c.C_CITY AS C_CITY,

c.C_NATION AS C_NATION,

c.C_REGION AS C_REGION,

c.C_PHONE AS C_PHONE,

c.C_MKTSEGMENT AS C_MKTSEGMENT,

s.S_NAME AS S_NAME,

s.S_ADDRESS AS S_ADDRESS,

s.S_CITY AS S_CITY,

s.S_NATION AS S_NATION,

s.S_REGION AS S_REGION,

s.S_PHONE AS S_PHONE,

p.P_NAME AS P_NAME,

p.P_MFGR AS P_MFGR,

p.P_CATEGORY AS P_CATEGORY,

p.P_BRAND AS P_BRAND,

p.P_COLOR AS P_COLOR,

p.P_TYPE AS P_TYPE,

p.P_SIZE AS P_SIZE,

p.P_CONTAINER AS P_CONTAINER

FROM lineorder AS l

INNER JOIN customer AS c ON c.C_CUSTKEY = l.LO_CUSTKEY

INNER JOIN supplier AS s ON s.S_SUPPKEY = l.LO_SUPPKEY

INNER JOIN part AS p ON p.P_PARTKEY = l.LO_PARTKEY;

0 rows in set. Elapsed: 2086.025 sec. Processed 604.64 million rows, 26.12 GB (289.85 thousand rows/s., 12.52 MB/s.)

bj2-all-clickhouse-test-01 :) select count(*) from lineorder_flat;

SELECT count(*)

FROM lineorder_flat

┌───count()─┐

│ 600037902 │

└───────────┘

1 rows in set. Elapsed: 0.006 sec.

CREATE TABLE ssb.lineorder_flat_local on cluster ck_cluster

AS ssb.lineorder_flat_tmp

ENGINE = ReplicatedMergeTree(

'/clickhouse/ssb/tables/{layer}-{shard}/lineorder_flat',

'{replica}'

)

PARTITION BY toYear(LO_ORDERDATE)

ORDER BY (LO_ORDERDATE, LO_ORDERKEY)

CREATE TABLE ssb.lineorder_flat on cluster ck_cluster AS ssb.lineorder_flat_local ENGINE = Distributed(

ck_cluster,

ssb,

lineorder_flat_local,

rand()

);

在三个分片执行:

INSERT INTO ssb.lineorder_flat SELECT * from ssb_local.lineorder_flat_tmp;

Ok.

0 rows in set. Elapsed: 747.548 sec. Processed 600.04 million rows, 140.40 GB (802.67 thousand rows/s., 187.82 MB/s.)

clickhouse数据导入用时总计约3500秒

最终数据

1 | bj2-all-clickhouse-test-01 :) select count(*) from customer ; |

数据占用空间

1 | [root@bj2-all-clickhouse-test-01 ssb]# ll |

单表查询测试

执行

1 | set max_threads=4; |

Q1.1

1 | SELECT sum(LO_EXTENDEDPRICE * LO_DISCOUNT) AS revenue |

Q1.2

1 | SELECT sum(LO_EXTENDEDPRICE * LO_DISCOUNT) AS revenue |

Q1.3

1 | SELECT sum(LO_EXTENDEDPRICE * LO_DISCOUNT) AS revenue |

Q2.1

1 | SELECT |

Q2.2

1 | SELECT |

Q2.3

1 | SELECT |

Q3.1

1 | SELECT |

Q3.2

1 | SELECT |

Q3.3

1 | SELECT |

Q3.4

1 | SELECT |

Q4.1

1 | SELECT |

Q4.2

1 | SELECT |

Q4.3

1 | SELECT |

多表关联测试

在DorisDB vs Clickhouse SSB性能测试对比报告, 不知道是不是疏漏了, Doris在测试时设置了1

set global parallel_fragment_exec_instance_num = 8;

但没有写有没有在clickhouse测试时设置SETTINGS max_threads=8;

由于我的环境只有四个4 cpu, 所以我这里设置为4.

通过以下命令可知, lineorder每个分片数据文件大概6.4G(压缩后), 我的环境内存只有8G, 不确定能否都缓存了

这里还需要注意两个问题:

- clickhouse分布式表相比单表关联更加不友好, JOIN要改为GLOBAL JOIN

- JOIN操作时一定要把数据量小的表放在右边(这会导致SQL改写更加麻烦)

所以我对DorisDB vs Clickhouse SSB性能测试对比报告中的多表关联测试SQL进行了微调

Q1.1

1 | SELECT SUM(LO_REVENUE) AS REVENUE |

Q1.2

1 | SELECT SUM(LO_REVENUE) AS REVENUE |

Q1.3

1 | SELECT SUM(LO_REVENUE) AS REVENUE |

Q2.1

1 | SELECT SUM(LO_REVENUE) AS LO_REVENUE, D_YEAR, P_BRAND |

Q2.2

1 | SELECT SUM(LO_REVENUE) AS LO_REVENUE, D_YEAR, P_BRAND |

Q2.3

1 | SELECT |

Q3.1

1 | SELECT C_NATION, S_NATION, D_YEAR, SUM(LO_REVENUE) AS LO_REVENUE |

Q3.2

1 | SELECT C_CITY, S_CITY, D_YEAR, SUM(LO_REVENUE) AS LO_REVENUE |

Q3.3

1 | SELECT |

Q3.4

1 | SELECT |

Q4.1

1 | SELECT |

Q4.2

1 | SELECT |

Q4.3

1 | SELECT |